Safe Multi-Agent Reinforcement Learning for Behavior-Based Cooperative Navigation

Authors:

M. Dawood, S. Pan, N. Dengler, S. Zhou, A. Schoellig, M. BennewitzType:

ArticlePublished in:

IEEE Robotics and Automation Letters (RA-L) and presented at the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)Year:

2025Links:

BibTex String

@article{dawood2025ral,

title={Safe Multi-Agent Reinforcement Learning for Behavior-Based Cooperative Navigation},

author={Dawood, Murad and Pan, Sicong and Dengler, Nils and Zhou, Siqi and Schoellig, Angela P and Bennewitz, Maren},

journal={IEEE Robotics and Automation Letters},

year={2025}

}

Abstract:

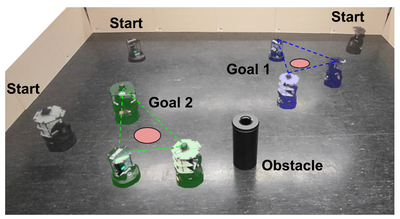

In this paper, we address the problem of behavior-based cooperative navigation of mobile robots using safe multi-agent reinforcement learning (MARL).Our work is the first to focus on cooperative navigation without individual reference targets for the robots, using a single target for the formation's centroid. This eliminates the complexities involved in having several path planners to control a team of robots.To ensure safety, our MARL framework uses model predictive control (MPC) to prevent actions that could lead to collisions during training and execution.We demonstrate the effectiveness of our method in simulation and on real robots, achieving safe behavior-based cooperative navigation without using individual reference targets, with zero collisions, and faster target reaching compared to baselines.Finally, we study the impact of MPC safety filters on the learning process, revealing that we achieve faster convergence during training and we show that our approach can be safely deployed on real robots, even during early stages of the training.