Integrating One-Shot View Planning with a Single Next-Best View via Long-Tail Multiview Sampling

Authors:

S. Pan, H. Hu, H. Wei, N. Dengler, T. Zaenker, M. Dawood, M. Bennewitz

Type:

Preprint

Published in:

arXiv preprint

Year:

2023

Related Projects:

AID4Crops, Embodied AI at LAMARR

Links:

BibTeX String

@article{pan2023one,
  title={Integrating One-Shot View Planning with a Single Next-Best View via Long-Tail Multiview Sampling},
  author={Pan, Sicong and Hu, Hao and Wei, Hui and Dengler, Nils and Zaenker, Tobias and Bennewitz, Maren},
  journal={arXiv preprint arXiv:2304.00910},
  year={2023}
}

Abstract:

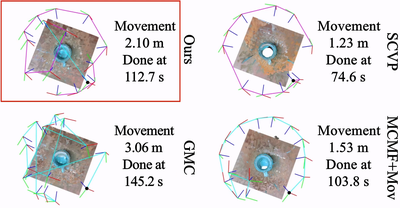

Existing view planning systems either adopt an iterative paradigm based on next-best views (NBVs) or a one-shot pipeline that relies on the set-covering view-planning (SCVP) network. However, neither approach can simultaneously guarantee high-quality and high-efficiency reconstruction of unknown 3D objects. To tackle this challenge, we introduce a crucial hypothesis: the prediction quality of the SCVP network improves as more information about the unknown object becomes available. There are two ways to provide this extra information: (1) leveraging perception data obtained from NBVs, and (2) training on an expanded dataset of multiview inputs. In this work, we introduce a novel combined pipeline that incorporates a single NBV before activating the proposed multiview-activated (MA-)SCVP network. The MA-SCVP is trained on a multiview dataset generated by our long-tail sampling method, which addresses the issue of unbalanced multiview inputs and enhances network performance. Extensive simulated experiments show that our system achieves a significant increase in surface coverage and a 45% reduction in movement cost compared to state-of-the-art systems. Real-world experiments demonstrate that our system generalizes beyond simulation and can be deployed in practice.
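The abstract does not spell out how the long-tail sampling works, so the following is only a hypothetical sketch of the imbalance problem it targets, not the authors' method. If multiview training inputs are drawn as naive random subsets of a candidate view set, subset sizes follow a binomial distribution centred on half the set, so the few-view inputs that actually occur after one initial view and a single NBV are almost never seen. The sketch instead draws the number of input views from a decaying (long-tail) distribution and then picks that many distinct views. The candidate-set size `N = 32`, the cap of 8 input views, the geometric decay, and all function names are illustrative assumptions.

```python
import random
from collections import Counter
from typing import List


def long_tail_weights(max_views: int, decay: float = 0.5) -> List[float]:
    """Normalized probabilities for drawing k = 1..max_views input views.

    Geometric decay (a hypothetical choice) gives few-view inputs most of
    the probability mass while keeping a tail of many-view inputs.
    """
    raw = [decay ** (k - 1) for k in range(1, max_views + 1)]
    total = sum(raw)
    return [w / total for w in raw]


def long_tail_multiview_samples(num_candidates: int, max_views: int, num_samples: int,
                                decay: float = 0.5, seed: int = 0) -> List[List[int]]:
    """Draw view-index subsets whose sizes follow the long-tail weights."""
    if not 1 <= max_views <= num_candidates:
        raise ValueError("need 1 <= max_views <= num_candidates")
    rng = random.Random(seed)
    sizes = list(range(1, max_views + 1))
    weights = long_tail_weights(max_views, decay)
    samples = []
    for _ in range(num_samples):
        # How many views are treated as "already observed" for this sample.
        k = rng.choices(sizes, weights=weights, k=1)[0]
        # Which views, chosen without repetition from the candidate set.
        samples.append(sorted(rng.sample(range(num_candidates), k)))
    return samples


def naive_uniform_subsets(num_candidates: int, num_samples: int, seed: int = 0) -> List[List[int]]:
    """Baseline: include each candidate view independently with probability 0.5.

    Subset sizes then follow Binomial(num_candidates, 0.5), so inputs with only
    one or two views are drawn with vanishingly small probability.
    """
    rng = random.Random(seed)
    return [[v for v in range(num_candidates) if rng.random() < 0.5]
            for _ in range(num_samples)]


if __name__ == "__main__":
    N = 32  # hypothetical size of the candidate view set
    tail = Counter(len(s) for s in long_tail_multiview_samples(N, max_views=8, num_samples=10_000))
    naive = Counter(len(s) for s in naive_uniform_subsets(N, num_samples=10_000))
    print("long-tail sizes:", sorted(tail.items()))
    print("naive sizes:    ", sorted(naive.items()))
```

Running the script prints both size histograms: the long-tail sampler concentrates on one- and two-view inputs, while the naive baseline clusters around 16 views. A geometric decay is just one simple way to obtain a long tail; any decreasing weighting over view counts would illustrate the same point.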