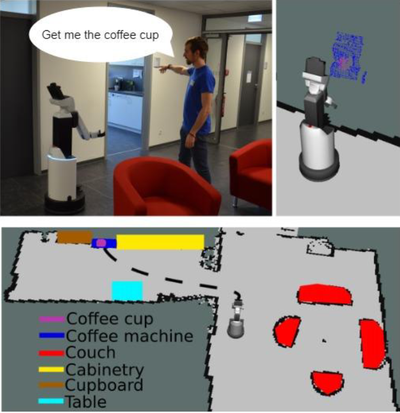

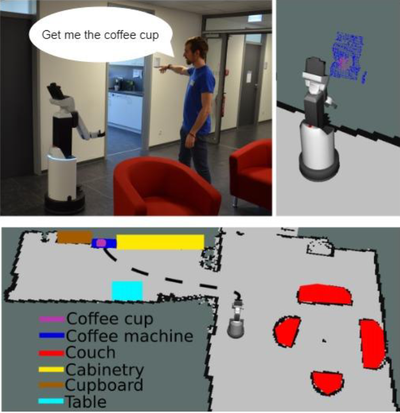

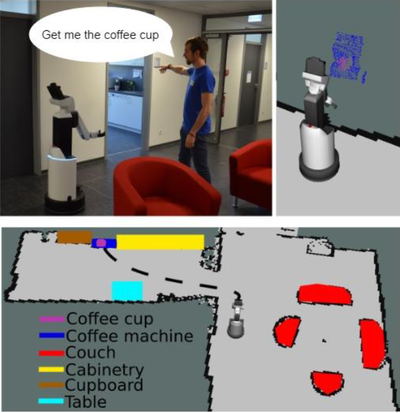

Coupled Active Perception for Mobile Manipulation in Unknown Environments

We propose a new approach in which the actively controllable cameras on the mobile manipulator (MoMa) perceive the objects and make an initial guess of the environment. Using this information, the mobile manipulator decides whether to perform an exhaustive search at the current location, move to the next location for initial mapping, or plan the steps for grasping. We hypothesize that such coupled active perception reduces mapping time and also helps the system grasp reliably.
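
The three-way decision described above can be sketched as a small selection rule. This is only an illustrative sketch, not the method used in this work: the `InitialGuess` type, the single `target_confidence` score, and both threshold values are hypothetical names and numbers introduced here to make the branching concrete.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Action(Enum):
    """The three options the mobile manipulator chooses between."""
    EXHAUSTIVE_SEARCH = auto()      # search the current location thoroughly
    MOVE_TO_NEXT_LOCATION = auto()  # continue initial mapping elsewhere
    PLAN_GRASP = auto()             # plan the steps for grasping


@dataclass
class InitialGuess:
    # Hypothetical summary of the camera-based initial guess: how confident
    # the perception step is that a graspable target is present, in [0, 1].
    target_confidence: float


def choose_action(guess: InitialGuess,
                  grasp_threshold: float = 0.8,
                  search_threshold: float = 0.4) -> Action:
    """Pick the next action from the initial guess.

    Both thresholds are assumed values for illustration only.
    """
    if guess.target_confidence >= grasp_threshold:
        # The target is well localized: go straight to grasp planning.
        return Action.PLAN_GRASP
    if guess.target_confidence >= search_threshold:
        # Something promising was seen: look more closely here.
        return Action.EXHAUSTIVE_SEARCH
    # Nothing of interest nearby: move on and keep mapping.
    return Action.MOVE_TO_NEXT_LOCATION
```

Under these assumptions, a weak detection such as `choose_action(InitialGuess(0.1))` sends the robot on to the next location, while a strong one triggers grasp planning. A real system would likely base the decision on richer information than a single score.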